Everything in life is a compromise.

Including with AI.

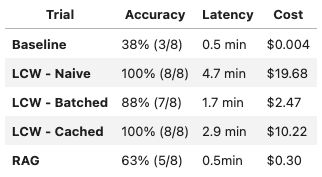

AI: long context windows (LCW) or retrieval-augmented generation (RAG)?

Both approaches let you tune the accuracy, latency, and cost of each question-answering use case.

Do you need high accuracy?

A long context window (naive or cached) could be the way forward, but it is expensive and takes the longest to return an answer.

Do you want faster and less expensive answers?

You’d have to sacrifice accuracy, and I assume you would still spend a fair bit to implement a RAG stack and pipeline.
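To make the cost side of this trade-off concrete, here is a minimal back-of-the-envelope sketch. It assumes that input cost scales with the number of tokens sent per question. Every figure in it (corpus size, chunk size, `TOP_K`, and the price) is a hypothetical placeholder, not a number from the paper or from any vendor's price list.

```python
from dataclasses import dataclass


@dataclass
class QueryCost:
    input_tokens: int
    cost_usd: float


def estimate_cost(input_tokens: int, price_per_million_usd: float) -> QueryCost:
    """Input-side cost of one question, given a per-million-token price."""
    return QueryCost(input_tokens, input_tokens * price_per_million_usd / 1_000_000)


# Hypothetical figures, chosen only to make the arithmetic visible.
CORPUS_TOKENS = 500_000      # whole document set placed in the context window
CHUNK_TOKENS = 500           # size of one retrieved chunk
TOP_K = 8                    # chunks a RAG retriever passes to the model
QUESTION_TOKENS = 50
PRICE_PER_MILLION_USD = 1.0  # placeholder price, not a real vendor rate

# LCW sends the full corpus with every question; RAG sends only the top-k chunks.
lcw = estimate_cost(CORPUS_TOKENS + QUESTION_TOKENS, PRICE_PER_MILLION_USD)
rag = estimate_cost(TOP_K * CHUNK_TOKENS + QUESTION_TOKENS, PRICE_PER_MILLION_USD)

print(f"LCW: {lcw.input_tokens} tokens, ${lcw.cost_usd:.4f} per question")
print(f"RAG: {rag.input_tokens} tokens, ${rag.cost_usd:.4f} per question")
```

This only counts input tokens per question. It leaves out the cost of building and running the retrieval stack, any discount a cached context might bring, and the accuracy difference, so treat it as an illustration of why the two approaches sit at different price points rather than as a sizing tool.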

See further details in the table below.

Link to the original Google paper.

AI trade-offs: LCW vs. RAG for cost, speed, and accuracy

18 Jan 2025

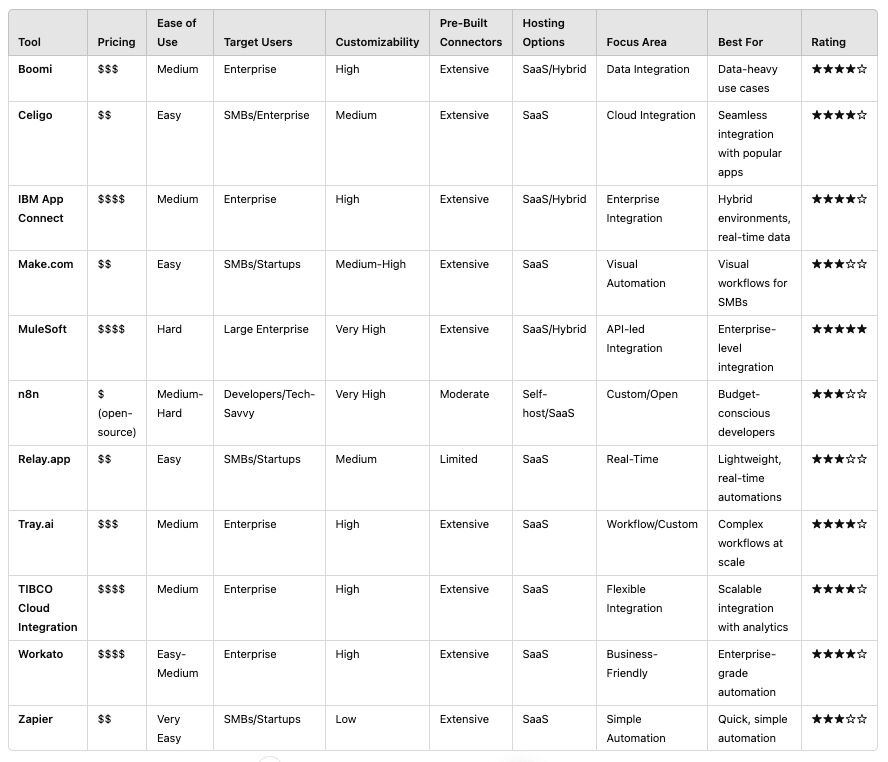

